Data hoarding is a major risk for facility operators

Would you rather lose all your clothes than delete that outdated maintenance report from your computer, even if you know there are probably other copies on your company’s server and even on your own machine?

You’re not alone.

A 2016 research study by Veritas Technologies found that employees would rather give up all their clothes and work on weekends than purge data—even if they know that it’s junk or, worse, potentially harmful to themselves or the company.

Data hoarding is widespread and has a major impact on business performance. So what’s the problem, why does it happen, and how can you fix it?

The trouble with too much data

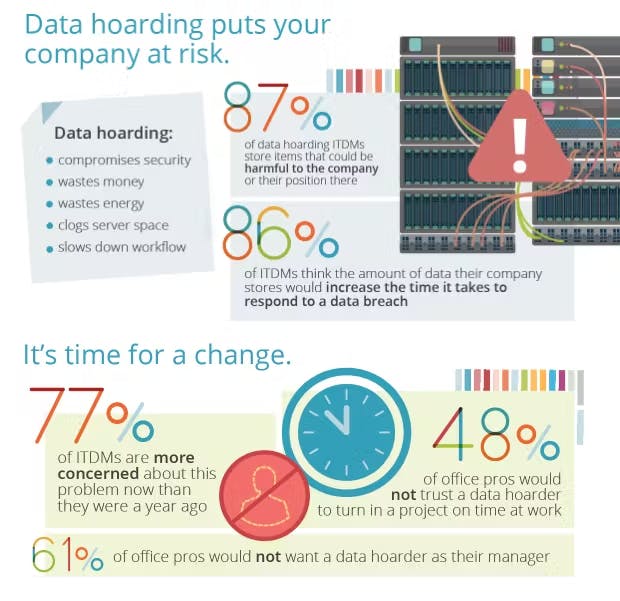

Accumulating too much data poses risks that facility operators pursuing digital transformation need to consider seriously. Although cloud storage is relatively cheap, other factors can add hidden costs to your data management efforts, such as:

- Decisions made based on bad information

The analysis leading up to big business decisions is typically carried out in parts by distributed teams. If team members aren’t working from a single version of truth, they are likely to use different versions of the input data. The result could be a decision that’s catastrophically wrong, such as submitting a bid with an outdated price list.

- Wasted resources

It’s costly to track, manage, and secure data that is duplicated or no longer useful. Veritas estimated that these costs would amount to USD 891 billion globally in 2020.

- Higher security risks

The more data you have, the more difficult it is to protect it and to respond to a data breach. The shift to remote work during COVID-19 has made this even harder. Additionally, 83% of IT decision makers and 62% of office professionals admitted to retaining data that was potentially damaging to both themselves and their employers (Veritas). Such data included unencrypted personnel files and even company secrets. Personal data that employees store on company systems may even put the company out of compliance with GDPR, which carries fines of up to EUR 20 million (about USD 22.3 million) or 4% of global annual turnover, whichever is higher.

> Security tip: Pay close attention to your backup data.

Many organizations fall short when it comes to securing the back door. Front ends are typically well guarded, but backup data is often stored in poorly protected disaster recovery systems or other less-than-ideal environments. If you’re storing your backups in the cloud, make sure you understand the common cloud security issues and how to address each of them.

The root of the problem

Why are employees so afraid to delete data, despite the risks of overaccumulation? In the study, 47% of respondents feared they would need the data again, and 43% were unsure which files were safe to delete. In a nutshell: information was ridiculously hard to find.

Three main factors lead to problems finding information.

- Silos

Division of labor, or breaking organizations down into departments and teams, makes economic sense. However, attempting to divide data along the same lines is unsustainable. It creates silos that require swathes of information to be replicated for each one, along with appropriate user permissions, versioning, and so forth. With all of that overhead, it’s unsurprising that many people can’t access the information they need.

- Processes

When a team prepares a report, do they write it in Word or in a co-authoring application like Google Docs? When you distribute that report, do you email it to 20 people, or post it in a collaboration application like Slack? The first option in each case typically produces multiple versions. This is well known, but ingrained processes are difficult to change.

- Broken search

Enterprise search technology in most organizations is outdated. It relies on exact keywords, manually input document-based metadata, and file system hierarchies to categorize and find information. It’s light-years behind what you’re used to when using any Internet search engine like Google or Bing. Because data is difficult to find, people tend to create copies and reorganize according to their own systems.

Fast fixes

Changing organizational structure and processes can take years. Fortunately, two actions can alleviate the impact of data hoarding quickly.

The first is clear: Reduce your data.

If you’re dealing with more than a few hundred documents, a purely manual cleanup could also take years. Instead, use artificial intelligence (AI) to reduce your data repos efficiently:

- Remove duplicates

- Find the latest revisions

- Develop relationships—connect source documents (for example, Word or CAD files) to their corresponding PDFs, find FMEAs and other documents that relate to a particular P&ID, etc.

- Classify and categorize information: gain a better idea of what data you have on hand, and find information faster
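The post recommends AI for this cleanup. As a much simpler stand-in, two of the tasks above (finding the latest revisions and connecting source files to their PDFs) can be sketched with plain rules when file names follow a consistent convention. The sketch below assumes a hypothetical `<document number>_Rev<revision>.<extension>` naming scheme in which letter revisions (A, B, ...) precede numeric issued revisions (0, 1, ...); the function names are illustrative and not taken from any product.

```python
import re
from collections import defaultdict
from pathlib import PurePath

# Hypothetical convention: "<doc number>_Rev<revision>.<ext>", e.g. "P-100_RevB.pdf".
NAME_PATTERN = re.compile(r"^(?P<doc>.+)_Rev(?P<rev>[A-Z0-9]+)$", re.IGNORECASE)


def revision_key(rev: str) -> tuple:
    """Sort key: letter revisions (A, B, ..., AA) come before numeric ones (0, 1, ...)."""
    if rev.isdigit():
        return (1, int(rev), "")
    return (0, len(rev), rev.upper())


def parse(filename: str):
    """Return (doc number, revision), or None if the name doesn't match the convention."""
    m = NAME_PATTERN.match(PurePath(filename).stem)
    if m is None:
        return None
    return m["doc"].upper(), m["rev"].upper()


def latest_revisions(filenames):
    """Map each document number to the sorted file names of its latest revision."""
    by_doc = defaultdict(list)
    for name in filenames:
        parsed = parse(name)
        if parsed is not None:
            doc, rev = parsed
            by_doc[doc].append((revision_key(rev), name))
    latest = {}
    for doc, entries in by_doc.items():
        best = max(key for key, _ in entries)
        latest[doc] = sorted(name for key, name in entries if key == best)
    return latest


def link_sources_to_pdfs(filenames):
    """Pair each non-PDF source file with the PDF that shares its stem (or None)."""
    pdf_by_stem = {
        PurePath(n).stem.upper(): n
        for n in filenames
        if PurePath(n).suffix.lower() == ".pdf"
    }
    return {
        n: pdf_by_stem.get(PurePath(n).stem.upper())
        for n in filenames
        if PurePath(n).suffix.lower() != ".pdf"
    }


files = [
    "P-100_RevA.docx", "P-100_RevA.pdf",
    "P-100_Rev0.docx", "P-100_Rev0.pdf",
    "P-200_RevB.dwg", "P-200_RevB.pdf",
    "notes.txt",
]
print(latest_revisions(files))
print(link_sources_to_pdfs(files))
```

Real repositories rarely follow a single naming convention, which is where content-based (AI) matching becomes necessary; rules like these only cover the well-behaved cases.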

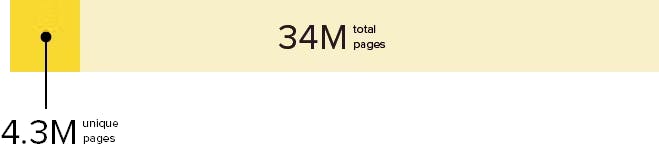

In a recent data cleanup and organization exercise for an FPSO (floating production, storage and offloading vessel), the Cenozai team helped the client whittle 2.82 million documents down to 960,000 unique documents, a 66% reduction achieved by removing duplicates alone. However, looking at information solely at the document level is not sufficient. It’s critical to drill down to the page level, because pages within a document may be duplicated elsewhere, and more often than not, only a few pages in huge documents (like vendor documents) are actually critical to facility operations.

Here was the story for our client at the page level:

- 2.82 million documents contained 34 million pages.

- Only 4.3 million pages (roughly 12.5%) were unique.
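The post doesn’t describe how the duplicates were detected. One common baseline for exact duplicates is content hashing, sketched below under stated assumptions: each document is represented as a list of already-extracted page texts (a hypothetical input shape; real PDFs need a text-extraction step first), pages are compared after collapsing whitespace and case, and two documents count as duplicates only if their page sequences match exactly. This is not necessarily the method used in the FPSO project, and it catches only exact matches; near-duplicates (for example, a re-scanned page) need fuzzy techniques such as MinHash or embedding similarity.

```python
import hashlib
import re


def page_fingerprint(text: str) -> str:
    """SHA-256 of the page text after collapsing whitespace and lowercasing,
    so trivial formatting differences don't hide an otherwise identical page."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def dedup_counts(documents: dict) -> dict:
    """Count total and unique documents and pages.

    `documents` maps a document ID to its list of page texts (hypothetical input).
    """
    unique_docs = set()
    unique_pages = set()
    total_pages = 0
    for pages in documents.values():
        fingerprints = [page_fingerprint(p) for p in pages]
        # Fixed-length hex digests concatenate unambiguously, so this hash
        # identifies the exact ordered sequence of pages in the document.
        doc_hash = hashlib.sha256("".join(fingerprints).encode("ascii")).hexdigest()
        unique_docs.add(doc_hash)
        unique_pages.update(fingerprints)
        total_pages += len(pages)
    return {
        "documents": len(documents),
        "unique_documents": len(unique_docs),
        "pages": total_pages,
        "unique_pages": len(unique_pages),
    }


vendor_docs = {
    "VD-001": ["Pump datasheet", "General notes", "Warranty terms"],
    "VD-001 (copy)": ["Pump  datasheet", "General notes", "Warranty terms"],
    "VD-002": ["Valve datasheet", "General notes", "Warranty terms"],
}
counts = dedup_counts(vendor_docs)
print(counts)
```

In this toy input, VD-002 survives document-level deduplication as a unique document, yet two of its three pages already exist elsewhere. That is the effect the page-level numbers above capture.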

Second: Upgrade your enterprise search software.

Graph-based search technology and AI, the same technologies that make Google Search so powerful, enable facility operators to break down silos and connect data across formats, from streaming sensor data to unstructured documents, and ultimately achieve a single version of truth. The result is faster, more complete decision making with less waste, rework, and redundancy. To succeed in Industry 4.0, make improving your organization’s information search experience a critical component of your digital transformation plan.
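To make the idea of connecting data across formats concrete, here is a toy sketch, not a description of Google’s or any vendor’s search engine: documents, equipment tags, and sensor streams become nodes in a graph, links between them become edges, and a breadth-first search returns everything within a few hops of a starting item. All node names and links are hypothetical; a real system would derive these links from the content itself (for example, by extracting tag numbers from P&IDs and FMEAs) and would combine the graph with text relevance ranking.

```python
from collections import defaultdict, deque

# Hypothetical links between items of different formats that share a context
# (here, equipment tags P-101 and V-201).
EDGES = [
    ("P&ID-100", "tag:P-101"),
    ("FMEA-17", "tag:P-101"),
    ("sensor:PT-101", "tag:P-101"),
    ("VD-001.pdf", "tag:P-101"),
    ("VD-001.pdf", "VD-001.docx"),  # source file linked to its PDF
    ("P&ID-200", "tag:V-201"),
]


def build_graph(edges):
    """Undirected adjacency sets: each link is navigable in both directions."""
    graph = defaultdict(set)
    for a, b in edges:
        graph[a].add(b)
        graph[b].add(a)
    return graph


def related(graph, start, max_hops=2):
    """Breadth-first search returning {node: hop distance} for every node
    reachable from `start` within `max_hops` edges (excluding `start`)."""
    distance = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if distance[node] == max_hops:
            continue  # don't expand past the hop limit
        for neighbor in graph.get(node, ()):
            if neighbor not in distance:
                distance[neighbor] = distance[node] + 1
                queue.append(neighbor)
    del distance[start]
    return distance


graph = build_graph(EDGES)
print(related(graph, "P&ID-100"))
```

Starting from the P&ID, the search surfaces the FMEA, the live pressure sensor, and the vendor PDF through their shared tag, even though nobody filed them in the same folder; raising `max_hops` to 3 also reaches the vendor’s source Word file.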

Garbage in, garbage out

Cleaning up your data repos and putting criteria in place so that end users understand what data they should keep will significantly improve efficiency and decision making, cut data management costs, and reduce security risks. As any computer science professional knows: garbage in, garbage out. Your insights are only as good as your data quality; if your data is too noisy, you might draw incorrect conclusions. Minimize data hoarding in your company, and you increase trust in your data and processes.
